Cochin University of Science and Technology (CUST) 2005 - 7th Sem B.Tech Information Technology, Artificial Neural Network - Question Paper

B.Tech. Degree VII Semester Examination, November 2005

VU -05 - 070(C)

IT/CS/EC/CE/ME/SE/EE/EI/EB 705 C ARTIFICIAL NEURAL NETWORKS (2002 Admissions)

Time: 3 Hours        Maximum Marks: 100

I (a) Design a Hebbian neuron/net to implement the logical two-input AND function using bipolar input-output patterns. (8)
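A minimal sketch of the Hebb rule for the bipolar AND question above, assuming the common textbook form w_i(new) = w_i(old) + x_i t and b(new) = b(old) + t, zero initial weights, a single pass over the four patterns in the order listed, and a bipolar step activation with threshold 0. The function names are illustrative only.

```python
# Hebb rule for a single bipolar neuron, trained on the two-input AND function.

def hebb_train(samples):
    """One pass of the Hebb rule from zero weights: w_i += x_i * t, b += t."""
    n_inputs = len(samples[0][0])
    w = [0] * n_inputs
    b = 0
    for x, t in samples:
        w = [wi + xi * t for wi, xi in zip(w, x)]
        b += t
    return w, b


def bipolar_step(net):
    """Bipolar step activation with threshold 0."""
    return 1 if net >= 0 else -1


def predict(w, b, x):
    net = b + sum(wi * xi for wi, xi in zip(w, x))
    return bipolar_step(net)


# Bipolar AND truth table: output is +1 only when both inputs are +1.
AND_BIPOLAR = [((1, 1), 1), ((1, -1), -1), ((-1, 1), -1), ((-1, -1), -1)]

w, b = hebb_train(AND_BIPOLAR)
print("weights:", w, "bias:", b)
for x, t in AND_BIPOLAR:
    print(x, "->", predict(w, b, x), "target", t)
```

With this ordering the pass ends at w = [2, 2], b = -2, and all four patterns are classified correctly.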

(b) Give the sigmoidal functions used in artificial neural networks and explain their significance. Plot them. (6)

(c) Define and explain the error function for the delta rule. (6)
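The error function usually associated with the delta (LMS / Widrow-Hoff) rule is the squared error E = ½ Σ (t − y_in)², and gradient descent on it gives Δw_i = α (t − y_in) x_i. The sketch below assumes an ADALINE-style linear unit, the bipolar AND patterns as training data and α = 0.1; these are illustrative choices, not part of the question.

```python
# Delta (LMS / Widrow-Hoff) rule for a single linear unit.

def net_input(w, b, x):
    return b + sum(wi * xi for wi, xi in zip(w, x))


def squared_error(w, b, samples):
    """E = 1/2 * sum over patterns of (t - y_in)^2."""
    return 0.5 * sum((t - net_input(w, b, x)) ** 2 for x, t in samples)


def delta_rule_epoch(w, b, samples, alpha):
    """One per-pattern pass: w_i += alpha * (t - y_in) * x_i, b += alpha * (t - y_in)."""
    w = list(w)
    for x, t in samples:
        err = t - net_input(w, b, x)
        w = [wi + alpha * err * xi for wi, xi in zip(w, x)]
        b += alpha * err
    return w, b


# Illustrative data: bipolar AND patterns.
SAMPLES = [((1, 1), 1), ((1, -1), -1), ((-1, 1), -1), ((-1, -1), -1)]
ALPHA = 0.1  # illustrative learning rate

w, b = [0.0, 0.0], 0.0
initial_error = squared_error(w, b, SAMPLES)
for epoch in range(20):
    w, b = delta_rule_epoch(w, b, SAMPLES, ALPHA)
final_error = squared_error(w, b, SAMPLES)
print(f"E before: {initial_error:.3f}, E after 20 epochs: {final_error:.3f}")
```

For these patterns E cannot fall below 0.5, because a linear unit cannot reproduce the four bipolar targets exactly; the delta rule drives the weights toward that least-squares minimum.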

OR

II (a) What are the assumptions made in artificial neural networks? (6)

(b) Define and plot the identity function, binary step function, binary sigmoidal function and bipolar sigmoidal function used in neural networks. (8)
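The four functions named in the question can be written down directly. This sketch assumes a steepness parameter λ (default 1) for both sigmoids and a threshold θ = 0 for the binary step; these are conventional choices, not values fixed by the question. Plotting is left out to keep the block dependency-free; the printed table can be plotted with any tool.

```python
import math


def identity(x):
    return x


def binary_step(x, theta=0.0):
    """1 if x >= theta, else 0."""
    return 1 if x >= theta else 0


def binary_sigmoid(x, lam=1.0):
    """Logistic function, range (0, 1). math.exp may overflow for very large |x|."""
    return 1.0 / (1.0 + math.exp(-lam * x))


def bipolar_sigmoid(x, lam=1.0):
    """Range (-1, 1); equal to tanh(lam * x / 2) and to 2 * binary_sigmoid - 1."""
    return (1.0 - math.exp(-lam * x)) / (1.0 + math.exp(-lam * x))


# Tabulate the four functions from -4.0 to 4.0 in steps of 0.5.
xs = [i / 2 for i in range(-8, 9)]
print(f"{'x':>5} {'ident':>6} {'step':>5} {'bin_sig':>8} {'bip_sig':>8}")
for x in xs:
    print(f"{x:5.1f} {identity(x):6.1f} {binary_step(x):5d} "
          f"{binary_sigmoid(x):8.4f} {bipolar_sigmoid(x):8.4f}")
```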

(c) Define and explain the generalized delta rule. How is it different from the original form of the delta rule? (6)

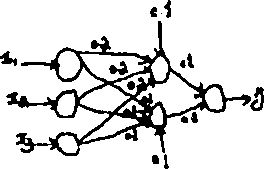

III (a) Find the new weights when the neural network shown in the figure is presented with the input pattern (1 1 1) and the target output is 1. Use a learning rate of 0.1 and the bipolar sigmoidal activation function $\phi(x) = \frac{1 - e^{-x}}{1 + e^{-x}}$. (10)
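The weights of the network in the figure cannot be recovered from this copy, so the sketch below only illustrates one generalized-delta-rule step for a single bipolar-sigmoid output neuron (no hidden layer), using the question's input, target 1 and learning rate 0.1 together with hypothetical initial weights. It relies on the identity f'(x) = ½[1 + f(x)][1 − f(x)] for the bipolar sigmoid. A multilayer network would additionally back-propagate δ to the hidden units.

```python
import math


def bipolar_sigmoid(x):
    return (1.0 - math.exp(-x)) / (1.0 + math.exp(-x))


def bipolar_sigmoid_prime(y):
    """Derivative expressed through the output y = f(x): f'(x) = 0.5 * (1 + y) * (1 - y)."""
    return 0.5 * (1.0 + y) * (1.0 - y)


def output_unit_update(w, x, t, alpha):
    """One generalized-delta-rule step for a single output neuron."""
    y_in = sum(wi * xi for wi, xi in zip(w, x))
    y = bipolar_sigmoid(y_in)
    delta = (t - y) * bipolar_sigmoid_prime(y)  # error signal term
    return [wi + alpha * delta * xi for wi, xi in zip(w, x)], y, delta


W_INIT = [0.2, -0.1, 0.3]  # hypothetical weights, NOT the ones in the figure
X = [1, 1, 1]              # input pattern from the question
w_new, y_old, delta = output_unit_update(W_INIT, X, t=1, alpha=0.1)
y_new = bipolar_sigmoid(sum(wi * xi for wi, xi in zip(w_new, X)))
print("old output:", round(y_old, 4), "new output:", round(y_new, 4))
print("new weights:", [round(v, 4) for v in w_new])
```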

(b) Explain the outer product rule. (5)

(c) When is the training of a network stopped? (5)

OR

IV (a) Define and explain the error signal terms in the output and hidden layers of a two-layer network. (6)

(b) What are the features and limitations of using a momentum term in the back-propagation training algorithm? (6)

(c) How are the initial weights and the learning rate parameter selected in the BP algorithm? Also compare per-pattern and per-epoch learning. (8)

V (a) Consider a Kohonen net with two cluster (output) units and five input units. The weight vectors for the output units are W1 = [1.0, 0.8, 0.6, 0.4, 0.2] and W2 = [1, 0.5, 1, 0.5, 1]. Use the square of the Euclidean distance to find the winning neuron for the input pattern X = [0.5, 1, 0.5, 0, 0.5]. Find the new weights for the winning unit. Assume the learning rate as 0J. (10)
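A sketch of one winner-take-all Kohonen step with the weight vectors and input pattern from the question, assuming the usual update w_J(new) = w_J(old) + α[x − w_J(old)] applied to the winning unit only. The learning rate printed in the question is not legible in this copy, so `ALPHA = 0.5` below is a placeholder, not the exam's value.

```python
def sq_euclidean(a, b):
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def kohonen_step(weights, x, alpha):
    """Winner-take-all update: w_J += alpha * (x - w_J) for the closest unit J only."""
    dists = [sq_euclidean(w, x) for w in weights]
    j = min(range(len(weights)), key=lambda k: dists[k])
    new_weights = [list(w) for w in weights]
    new_weights[j] = [wj + alpha * (xi - wj) for wj, xi in zip(weights[j], x)]
    return j, dists, new_weights


W = [[1.0, 0.8, 0.6, 0.4, 0.2],   # unit 1
     [1.0, 0.5, 1.0, 0.5, 1.0]]   # unit 2
X = [0.5, 1.0, 0.5, 0.0, 0.5]
ALPHA = 0.5  # placeholder: the question's learning rate is illegible here

winner, dists, W_new = kohonen_step(W, X, ALPHA)
print("squared distances:", [round(d, 4) for d in dists])
print("winning unit:", winner + 1)
print("updated weights:", [round(v, 4) for v in W_new[winner]])
```

The squared distances are D1 = 0.55 and D2 = 1.25, so unit 1 wins whatever the learning rate; only the size of its update depends on α.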

(b) Explain one typical application of counter propagation network. (10)

OR

VI (a) Explain how training is carried out in the Kohonen and Grossberg layers of a counter-propagation network. (10)

(b) Compare counter-propagation and feed-forward neural networks. (5)

(c) How do we compute a normalized vector? Normalize X = [0.5, 1, 0.5, 0, 0.5]. (5)
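A vector is normalized by dividing each component by its Euclidean length, x̂ = x / ||x||, so that the result has unit length. A minimal sketch using the vector from the question (the function name is illustrative):

```python
import math


def normalize(x):
    """Return x / ||x|| using the Euclidean norm."""
    norm = math.sqrt(sum(xi * xi for xi in x))
    if norm == 0:
        raise ValueError("a zero vector cannot be normalized")
    return [xi / norm for xi in x]


X = [0.5, 1.0, 0.5, 0.0, 0.5]
X_hat = normalize(X)
print([round(v, 4) for v in X_hat])
```

Here ||X|| = √1.75 ≈ 1.323, giving approximately [0.378, 0.756, 0.378, 0, 0.378].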


VII (a) Explain how training is applied to a Boltzmann machine. (6)

(b) Explain what is meant by simulated annealing. (6)

(c) Write a short note on simulated annealing. (8)
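A minimal sketch of simulated annealing on a toy one-dimensional energy function, assuming the Metropolis acceptance rule (a worse state is accepted with probability exp(−ΔE/T)) and a geometric cooling schedule. The energy function, step size, initial temperature and cooling factor are hypothetical choices for illustration, not values from the question.

```python
import math
import random


def simulated_annealing(energy, x0, step=0.5, t0=10.0, cooling=0.999,
                        iters=5000, seed=0):
    """Minimize energy(x) over real x, returning the best state seen."""
    rng = random.Random(seed)
    x, e = x0, energy(x0)
    best_x, best_e = x, e
    t = t0
    for _ in range(iters):
        cand = x + rng.uniform(-step, step)   # random neighbouring state
        ce = energy(cand)
        d = ce - e
        # Metropolis criterion: always accept downhill moves; accept uphill
        # moves with probability exp(-dE / T), which shrinks as T cools.
        if d <= 0 or rng.random() < math.exp(-d / t):
            x, e = cand, ce
            if e < best_e:
                best_x, best_e = x, e
        t *= cooling                          # geometric cooling schedule
    return best_x, best_e


def toy_energy(x):
    # Hypothetical bumpy energy: a parabola with ripples that create local minima.
    return (x - 3.0) ** 2 + math.sin(5 * x)


best_x, best_e = simulated_annealing(toy_energy, x0=-5.0)
print(f"best x = {best_x:.3f}, energy = {best_e:.3f}")
```

Early on, the high temperature lets the search climb out of the ripples' local minima; as T falls the walk behaves more and more like pure descent.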

OR

VIII (a) Explain the motivation for applying statistical methods in ANN training. (6)

(b) Write a short note on Cauchy training. (6)

(c) Explain how an ANN can be applied to solve any one optimization problem. (8)

IX (a) Using the outer product construction method, design a Hopfield network to store the patterns A = (+1, +1, -1), B = (-1, +1, -1) and C = (-1, -1, +1). (8)
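A sketch of the outer-product construction W = Σ_p p pᵀ with the diagonal set to zero, applied to the three bipolar patterns as read from the question. The asynchronous recall loop (a unit keeps its state when its net input is 0) is an added assumption; the question itself only asks for the weights.

```python
def outer_product_weights(patterns):
    """W[i][j] = sum over patterns of p[i] * p[j], with W[i][i] = 0."""
    n = len(patterns[0])
    W = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    W[i][j] += p[i] * p[j]
    return W


def recall(W, x, max_sweeps=10):
    """Asynchronous bipolar updates until no unit changes."""
    s = list(x)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(s)):
            net = sum(W[i][j] * s[j] for j in range(len(s)))
            new = 1 if net > 0 else (-1 if net < 0 else s[i])
            if new != s[i]:
                s[i] = new
                changed = True
        if not changed:
            break
    return s


A = [1, 1, -1]
B = [-1, 1, -1]
C = [-1, -1, 1]
W = outer_product_weights([A, B, C])
print("W =", W)
for name, p in (("A", A), ("B", B), ("C", C)):
    print(name, p, "->", recall(W, p))
```

For these patterns W = [[0, 1, -1], [1, 0, -3], [-1, -3, 0]]. A and C (note C = -A) are fixed points under this recall loop, while B settles into A, an instance of crosstalk between stored patterns.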

(b) Explain how data is stored and retrieved in an ART-1 structure. (8)

(c) Explain how an associative memory is different from ordinary memory. (4)

OR

X (a) Calculate the weights of a 2x2 BAM to store the following pairs of input-output patterns: {X1 = (+1, +1, -1), Y1 = (+1, -1, +1)} and {X2 = (+1, -1, +1), Y2 = (+1, -1, +1, -1)}. (8)
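The BAM weight matrix is the sum of outer products W = Σ_k X_kᵀ Y_k, and recall runs in both directions through W and its transpose. The output patterns in this question are garbled in the source scan, so the sketch pairs the two input patterns (+1, +1, -1) and (+1, -1, +1) with hypothetical two-component outputs Y1 = (+1, +1) and Y2 = (+1, -1). The bipolar `sign` helper sends ties to +1, which is an assumption of this sketch.

```python
def bam_weights(pairs):
    """W[i][j] = sum over pairs of X[i] * Y[j] (sum of outer products X^T Y)."""
    n, m = len(pairs[0][0]), len(pairs[0][1])
    W = [[0] * m for _ in range(n)]
    for X, Y in pairs:
        for i in range(n):
            for j in range(m):
                W[i][j] += X[i] * Y[j]
    return W


def sign(v):
    # Bipolar threshold; ties go to +1 (an assumption for this sketch).
    return 1 if v >= 0 else -1


def forward(W, X):
    """Recall Y from X: Y_j = sign(sum_i X_i * W[i][j])."""
    return [sign(sum(X[i] * W[i][j] for i in range(len(X)))) for j in range(len(W[0]))]


def backward(W, Y):
    """Recall X from Y: X_i = sign(sum_j W[i][j] * Y_j)."""
    return [sign(sum(W[i][j] * Y[j] for j in range(len(Y)))) for i in range(len(W))]


X1, X2 = [1, 1, -1], [1, -1, 1]  # input patterns from the question
Y1, Y2 = [1, 1], [1, -1]         # hypothetical output patterns
W = bam_weights([(X1, Y1), (X2, Y2)])
print("W =", W)
print(forward(W, X1), forward(W, X2), backward(W, Y1), backward(W, Y2))
```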

(b) Explain how data is stored and retrieved in an ART-1 structure. (6)

(c) Explain the mutation and crossover operations in a genetic algorithm. (6)
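A minimal sketch of two common genetic-algorithm operators on binary chromosomes, assuming single-point crossover and bit-flip mutation (other variants, such as two-point or uniform crossover, are also in use). The parent strings, seed and mutation rate are hypothetical.

```python
import random


def single_point_crossover(p1, p2, rng):
    """Cut both parents at one random point and swap the tails."""
    if len(p1) != len(p2) or len(p1) < 2:
        raise ValueError("parents must have equal length >= 2")
    point = rng.randint(1, len(p1) - 1)  # cut strictly inside the string
    child1 = p1[:point] + p2[point:]
    child2 = p2[:point] + p1[point:]
    return child1, child2, point


def bit_flip_mutation(chrom, rate, rng):
    """Flip each bit independently with probability `rate`."""
    return [1 - g if rng.random() < rate else g for g in chrom]


rng = random.Random(42)
parent1 = [1, 1, 1, 1, 1, 1, 1, 1]  # hypothetical parents
parent2 = [0, 0, 0, 0, 0, 0, 0, 0]
c1, c2, cut = single_point_crossover(parent1, parent2, rng)
m1 = bit_flip_mutation(c1, rate=0.1, rng=rng)
print("cut at", cut, "->", c1, c2)
print("mutated child1:", m1)
```

Crossover recombines material that already exists in the population, while mutation injects small random changes that keep the population from losing diversity.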
