Punjab University 2008-3rd Sem B.E Information Technology COMMUNICATION THEORY - Question Paper

BE third SEMESTER

COMMUNICATION THEORY

2128

B.E. (IT) 3rd Semester

IT-313: COMMUNICATION THEORY

Time allowed: 3 hours    Max. Marks: 100

Note: Attempt any five questions, selecting at least two questions from each of Part A and Part B.

Part A

Q1. Draw the full-wave rectified waveform of $f(t) = \cos t$ and find $A$, $B$, $C$, $D$ in the expression: [20]

$f(t) = A\left[1 + B\cos 2t - C\cos 4t + D\cos 6t + \cdots\right]$

Use: $\sin\left((2n-1)\pi/2\right) = (-1)^{n+1}$ and $\sin\left((2n+1)\pi/2\right) = (-1)^{n}$.
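As a numerical cross-check for the full-wave rectifier question, the sketch below integrates $|\cos t|$ over one period ($\pi$) with a plain midpoint rule and compares the harmonics with the closed form $a_n = 4(-1)^{n+1}/\left(\pi(4n^2-1)\right)$, which follows from the two sine identities in the hint. It assumes the standard result $A = 2/\pi$ is the DC term; the function names and sample count are illustrative choices, not part of the paper.

```python
import math

def rectified_cos_coeff(k, samples=20000):
    """Coefficient a_k of cos(2kt) in |cos t|, by the midpoint rule over one period."""
    h = math.pi / samples
    total = 0.0
    for i in range(samples):
        t = -math.pi / 2 + (i + 0.5) * h
        total += abs(math.cos(t)) * math.cos(2 * k * t)
    # a_0 is the mean over the period pi; the harmonics carry the usual factor 2/pi
    scale = 1 / math.pi if k == 0 else 2 / math.pi
    return scale * total * h

def closed_form_coeff(n):
    """a_n = 4(-1)^(n+1) / (pi (4n^2 - 1)) for n >= 1, derived with the sine identities."""
    return 4 * (-1) ** (n + 1) / (math.pi * (4 * n * n - 1))

A = rectified_cos_coeff(0)        # expected 2/pi
B = rectified_cos_coeff(1) / A    # expected 2/3
C = -rectified_cos_coeff(2) / A   # minus sign: the series is written "- C cos 4t"; expected 2/15
D = rectified_cos_coeff(3) / A    # expected 2/35

print(f"A={A:.6f}  B={B:.6f}  C={C:.6f}  D={D:.6f}")
```

The numerical values should agree with $A = 2/\pi$, $B = 2/3$, $C = 2/15$, $D = 2/35$ to about six decimal places.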

Q2. For the exponential function $p(x) = a\,e^{-b|x|}$, $b > 0$, determine: [20]

1. Draw the function.
2. The first moment.
3. The second moment.
4. The variance.
5. The distribution function.
6. The relation between $a$ and $b$ for which $p(x)$ is realizable (i.e. is a valid probability density).
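A minimal numerical sketch for the exponential-density question, assuming the realizability condition is unit area, $\int a\,e^{-b|x|}\,dx = 2a/b = 1$, so $a = b/2$. With that choice the first moment is $0$, the second moment and variance are both $2/b^2$, and the distribution function is $\tfrac12 e^{bx}$ for $x < 0$ and $1 - \tfrac12 e^{-bx}$ for $x \ge 0$. The value $b = 1.5$ and all function names are hypothetical.

```python
import math

def laplace_pdf(x, b):
    """p(x) = a exp(-b|x|) with a = b/2, the value that makes the total area 2a/b equal to 1."""
    a = b / 2
    return a * math.exp(-b * abs(x))

def laplace_cdf(x, b):
    """Distribution function P(X <= x), obtained by integrating the pdf piecewise."""
    if x < 0:
        return 0.5 * math.exp(b * x)
    return 1 - 0.5 * math.exp(-b * x)

def moment(k, b, limit=40.0, samples=100000):
    """k-th moment E[X^k] by the midpoint rule on [-limit, limit]; the tails beyond are negligible."""
    h = 2 * limit / samples
    total = 0.0
    for i in range(samples):
        x = -limit + (i + 0.5) * h
        total += x ** k * laplace_pdf(x, b)
    return total * h

b = 1.5                   # hypothetical decay constant
area = moment(0, b)       # 1 when a = b/2
m1 = moment(1, b)         # first moment: 0 by symmetry
m2 = moment(2, b)         # second moment: 2/b^2
variance = m2 - m1 ** 2   # equals m2 because the mean is zero
print(area, m1, m2, variance)
```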

Q3(a) An ideal low pass filter is given by: [10+10]

$H_l(\omega) = K e^{-j\omega t_0}$ for $|\omega| < \omega_c$, and $0$ elsewhere.

Find the impulse response of its equivalent bandpass filter.

(b) Discuss Optimum Filters.

Q4(a) Determine the Fourier transform of a single RF pulse given by: [10+10]

$f(t) = \begin{cases} V\cos\omega_c t, & -\tau/2 < t < \tau/2 \\ 0, & \text{elsewhere} \end{cases}$
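One way to check an answer to the RF-pulse question: splitting $\cos\omega_c t\cos\omega t$ into sum and difference terms gives $F(\omega) = \frac{V\tau}{2}\left[\mathrm{Sa}\!\left(\frac{(\omega-\omega_c)\tau}{2}\right) + \mathrm{Sa}\!\left(\frac{(\omega+\omega_c)\tau}{2}\right)\right]$ with $\mathrm{Sa}(x) = \sin x / x$. The sketch compares that closed form with direct numerical integration; since $f(t)$ is even, only the cosine part of $e^{-j\omega t}$ survives. The values of $V$, $\tau$ and $\omega_c$ are hypothetical.

```python
import math

def sa(x):
    """Sampling function Sa(x) = sin(x)/x, with Sa(0) = 1."""
    return 1.0 if x == 0 else math.sin(x) / x

def rf_pulse_spectrum(omega, V, tau, omega_c):
    """Closed form (V tau / 2)[Sa((w - wc) tau/2) + Sa((w + wc) tau/2)]."""
    return V * tau / 2 * (sa((omega - omega_c) * tau / 2) + sa((omega + omega_c) * tau / 2))

def rf_pulse_spectrum_numeric(omega, V, tau, omega_c, samples=20000):
    """F(w) by the midpoint rule; f(t) is even, so the imaginary part vanishes."""
    h = tau / samples
    total = 0.0
    for i in range(samples):
        t = -tau / 2 + (i + 0.5) * h
        total += V * math.cos(omega_c * t) * math.cos(omega * t)
    return total * h

V, tau, omega_c = 1.0, 2.0, 20.0   # hypothetical pulse parameters
for w in (0.0, 5.0, 20.0, 23.0):
    print(w, rf_pulse_spectrum(w, V, tau, omega_c), rf_pulse_spectrum_numeric(w, V, tau, omega_c))
```

The spectrum is two Sa-shaped lobes of peak height $V\tau/2$ centred at $\pm\omega_c$, which is the shift property applied to a rectangular pulse.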

(b) What is the expected value of the sum of random variables that are not necessarily statistically independent?

Find the variance of the sum of two random variables x and y. What is the result if these random variables are statistically independent?
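The standard results are $E[x+y] = E[x] + E[y]$ whether or not the variables are independent, and $\mathrm{Var}(x+y) = \mathrm{Var}(x) + \mathrm{Var}(y) + 2\,\mathrm{Cov}(x,y)$, where the covariance term vanishes under independence. The sketch below checks both identities exactly (using `fractions.Fraction`) on two small hypothetical joint distributions, one dependent and one built as a product of marginals.

```python
from fractions import Fraction as Fr

def sum_statistics(joint):
    """joint maps (x, y) -> probability. Returns exact moments of x, y and x + y."""
    def expect(g):
        return sum(p * g(x, y) for (x, y), p in joint.items())

    mx = expect(lambda x, y: x)
    my = expect(lambda x, y: y)
    var_x = expect(lambda x, y: (x - mx) ** 2)
    var_y = expect(lambda x, y: (y - my) ** 2)
    cov = expect(lambda x, y: (x - mx) * (y - my))
    mean_sum = expect(lambda x, y: x + y)
    var_sum = expect(lambda x, y: (x + y - mean_sum) ** 2)
    return mx, my, var_x, var_y, cov, mean_sum, var_sum

# Hypothetical dependent pair: x and y tend to take the same value.
dependent = {(0, 0): Fr(3, 10), (0, 1): Fr(1, 10), (1, 0): Fr(1, 10), (1, 1): Fr(1, 2)}

# Hypothetical independent pair built as a product of marginals, so Cov(x, y) = 0.
px = {0: Fr(2, 5), 1: Fr(3, 5)}
py = {0: Fr(1, 4), 1: Fr(3, 4)}
independent = {(x, y): px[x] * py[y] for x in px for y in py}

for joint in (dependent, independent):
    mx, my, var_x, var_y, cov, mean_sum, var_sum = sum_statistics(joint)
    assert mean_sum == mx + my                  # linearity: holds with or without independence
    assert var_sum == var_x + var_y + 2 * cov   # general variance of a sum
```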

Part B

Q5(a) Derive an expression for the noise figure of cascaded networks. Prove that the first stage makes the largest contribution to the overall noise figure and that the contributions of the subsequent stages are progressively reduced.
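The expression usually derived here is the Friis formula, $F = F_1 + \frac{F_2 - 1}{G_1} + \frac{F_3 - 1}{G_1 G_2} + \cdots$, with noise factors and available power gains as linear ratios. Each later stage's excess noise is divided by the total gain ahead of it, which is why the first stage dominates. A minimal sketch with hypothetical stage values:

```python
def cascaded_noise_figure(stages):
    """Friis formula. stages: list of (F, G) as linear power ratios, input stage first.
    Returns the overall noise factor and the contribution of each stage."""
    contributions = []
    gain_before = 1.0   # product G1 G2 ... G(k-1) of the stages ahead of stage k
    for k, (f, g) in enumerate(stages):
        contributions.append(f if k == 0 else (f - 1) / gain_before)
        gain_before *= g
    return sum(contributions), contributions

# Hypothetical three-stage receiver: (noise factor, available power gain)
total, parts = cascaded_noise_figure([(2.0, 10.0), (5.0, 20.0), (10.0, 5.0)])
print(total, parts)
```

Even though the third stage is the noisiest ($F_3 = 10$), it adds only $9/200$ because it sits behind a gain of 200.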

(b) What is interference? List the various types of noise present in semiconductor diodes and transistors. Explain thermal noise in detail. [10+10]
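For the thermal-noise part, the standard results are the open-circuit RMS noise voltage $v_n = \sqrt{4kTRB}$ of a resistor and the available noise power $kTB$, which does not depend on $R$. The sketch evaluates both for a hypothetical 10 kΩ resistor at 290 K over 10 kHz; the Boltzmann constant is the exact 2019 SI value.

```python
import math

K_BOLTZMANN = 1.380649e-23   # J/K (exact in the 2019 SI)

def thermal_noise_vrms(resistance_ohm, temperature_k, bandwidth_hz):
    """Open-circuit RMS noise voltage of a resistor: sqrt(4 k T R B)."""
    return math.sqrt(4 * K_BOLTZMANN * temperature_k * resistance_ohm * bandwidth_hz)

def available_noise_power(temperature_k, bandwidth_hz):
    """Power a noisy resistor delivers to a matched load: k T B (independent of R)."""
    return K_BOLTZMANN * temperature_k * bandwidth_hz

# Hypothetical example: 10 kOhm resistor at 290 K observed over a 10 kHz bandwidth.
v_n = thermal_noise_vrms(10e3, 290.0, 10e3)
p_n = available_noise_power(290.0, 10e3)
print(f"v_n = {v_n:.4e} V rms, available power = {p_n:.4e} W")
```

The two results are consistent: a matched load sees half the open-circuit voltage, so $v_n^2/(4R) = kTB$.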

Q6(a) Explain shot noise in detail.
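Shot noise in a diode or junction is usually quantified with the Schottky formula $\overline{i_n^2} = 2qI_{dc}B$, so the RMS noise current grows with the square root of both the DC current and the bandwidth. A small sketch, with a hypothetical 1 mA current and 1 MHz bandwidth and the exact 2019 SI elementary charge:

```python
import math

Q_ELECTRON = 1.602176634e-19  # C (exact in the 2019 SI)

def shot_noise_irms(dc_current_a, bandwidth_hz):
    """Schottky formula for a junction: i_n,rms = sqrt(2 q I_dc B)."""
    return math.sqrt(2 * Q_ELECTRON * dc_current_a * bandwidth_hz)

# Hypothetical example: 1 mA through a junction diode, 1 MHz bandwidth.
i_n = shot_noise_irms(1e-3, 1e6)
print(f"i_n = {i_n:.4e} A rms")
```

Quadrupling the current only doubles the RMS noise current, which is the square-root dependence in the formula.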

(b) Prove that the entropy is maximum when all messages are equally likely, and that this maximum value is the logarithm of the number of messages. [10+10]
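The statement to be proved is $H = -\sum_k p_k \log_2 p_k \le \log_2 M$, with equality for $p_k = 1/M$. The sketch below does not replace the proof. It is a numerical illustration that draws random distributions over a hypothetical $M = 6$ messages and confirms that none of them exceeds the entropy of the uniform distribution.

```python
import math
import random

def entropy_bits(probs):
    """H = -sum p log2 p in bits/message; terms with p = 0 contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

M = 6                             # hypothetical number of messages
uniform = [1 / M] * M
h_max = entropy_bits(uniform)     # equals log2(M)

random.seed(1)
for _ in range(1000):
    weights = [random.random() for _ in range(M)]
    total = sum(weights)
    probs = [w / total for w in weights]
    # any other distribution over the same M messages has no more entropy
    assert entropy_bits(probs) <= h_max + 1e-12
```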

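Q7(a) Apply Shannon-Fano coding to the source below, given M = 2 (binary code). Find the average length of the code words, the efficiency and the redundancy.

$[X] = [x_1\ \ x_2\ \ x_3\ \ x_4\ \ x_5\ \ x_6\ \ x_7]$

$[P] = [1/4\ \ 1/16\ \ 1/16\ \ 1/16\ \ 1/8\ \ 1/4\ \ \text{[illegible]}]$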
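A sketch of binary Shannon-Fano coding: sort the symbols by decreasing probability, cut the list where the two halves' probabilities are as equal as possible, append 0 to one half and 1 to the other, and recurse. Efficiency is taken as $H/\bar{L}$ and redundancy as $1 - H/\bar{L}$. Because the probability of $x_7$ cannot be read from the source (the six legible values sum to $13/16$), the example uses a hypothetical dyadic source instead of the paper's data.

```python
import math

def shannon_fano(probs):
    """Binary (M = 2) Shannon-Fano codewords for symbols 0..n-1, returned in input order."""
    codes = {i: "" for i in range(len(probs))}
    # Sort symbol indices by decreasing probability (stable, so ties keep input order).
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)

    def split(group):
        if len(group) <= 1:
            return
        total = sum(probs[i] for i in group)
        running, best_k, best_diff = 0.0, 1, float("inf")
        # Choose the cut that makes the two halves' probabilities as equal as possible.
        for k in range(1, len(group)):
            running += probs[group[k - 1]]
            diff = abs(total - 2 * running)
            if diff < best_diff:
                best_k, best_diff = k, diff
        upper, lower = group[:best_k], group[best_k:]
        for i in upper:
            codes[i] += "0"
        for i in lower:
            codes[i] += "1"
        split(upper)
        split(lower)

    split(order)
    return [codes[i] for i in range(len(probs))]

def code_metrics(probs, codes):
    """Average length L, source entropy H, efficiency H/L and redundancy 1 - H/L (binary code)."""
    avg_len = sum(p * len(c) for p, c in zip(probs, codes))
    entropy = -sum(p * math.log2(p) for p in probs if p > 0)
    efficiency = entropy / avg_len
    return avg_len, entropy, efficiency, 1 - efficiency

# Hypothetical dyadic source (not the paper's data).
probs = [1/4, 1/4, 1/8, 1/8, 1/16, 1/16, 1/16, 1/16]
codes = shannon_fano(probs)
avg_len, entropy, efficiency, redundancy = code_metrics(probs, codes)
print(codes, avg_len, efficiency, redundancy)
```

For a dyadic source every codeword length equals $-\log_2 p_k$, so the code is 100% efficient with zero redundancy; non-dyadic probabilities give an efficiency below 1.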

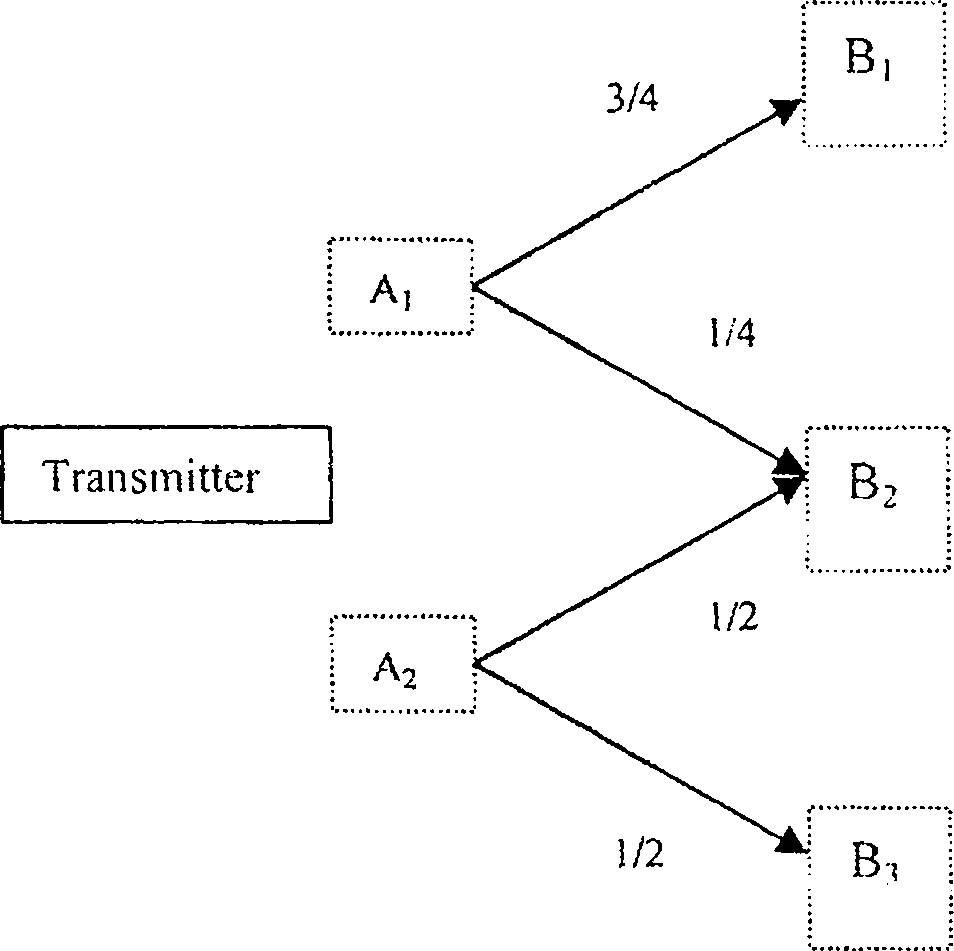

For the channel given, find I (X,Y)

P(A,) = P(A2)==1/2

(k

Receiver
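The mutual information can be computed as $I(X,Y) = \sum_{i,j} P(x_i)P(y_j|x_i)\log_2\frac{P(y_j|x_i)}{P(y_j)}$ once the transition probabilities are known. Those values are only in the channel figure, so the sketch below takes equiprobable inputs from the question and substitutes a hypothetical binary symmetric channel with crossover probability 0.1; swap in the figure's matrix to apply it to the actual problem.

```python
import math

def mutual_information(p_x, channel):
    """I(X;Y) in bits. p_x[i] = P(x_i); channel[i][j] = P(y_j | x_i)."""
    n_out = len(channel[0])
    p_y = [sum(p_x[i] * channel[i][j] for i in range(len(p_x))) for j in range(n_out)]
    info = 0.0
    for i, px in enumerate(p_x):
        for j in range(n_out):
            joint = px * channel[i][j]
            if joint > 0:
                info += joint * math.log2(channel[i][j] / p_y[j])
    return info

# Inputs from the question: P(A1) = P(A2) = 1/2.
# Hypothetical channel: binary symmetric with crossover probability p (not the figure's values).
p = 0.1
bsc = [[1 - p, p], [p, 1 - p]]
i_xy = mutual_information([0.5, 0.5], bsc)
print(f"I(X,Y) = {i_xy:.4f} bits")
```

For a binary symmetric channel with equiprobable inputs this reduces to $1 - H(p)$, where $H(p)$ is the binary entropy function.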

Q8. What type of probability density gives maximum entropy if the variance of $x$ about its mean $m$ is $\sigma^2$? Also find this maximum value of entropy. [20]
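The expected answer is the Gaussian density, whose differential entropy is $\frac{1}{2}\log_2(2\pi e\sigma^2)$ bits. The sketch below does not prove optimality. It compares that value with the closed-form entropies of two other densities constrained to the same variance (uniform and Laplacian). The choice $\sigma = 1$ is hypothetical.

```python
import math

def gaussian_entropy(sigma):
    """Differential entropy (bits) of a Gaussian with variance sigma^2: 0.5 log2(2 pi e sigma^2)."""
    return 0.5 * math.log2(2 * math.pi * math.e * sigma ** 2)

def uniform_entropy(sigma):
    """Uniform density of width w has variance w^2/12, so w = sqrt(12) sigma and H = log2 w."""
    return math.log2(math.sqrt(12) * sigma)

def laplacian_entropy(sigma):
    """Laplacian (1/(2 beta)) exp(-|x - m|/beta) has variance 2 beta^2 and H = log2(2 e beta)."""
    beta = sigma / math.sqrt(2)
    return math.log2(2 * math.e * beta)

sigma = 1.0   # hypothetical standard deviation
results = {
    "gaussian": gaussian_entropy(sigma),
    "laplacian": laplacian_entropy(sigma),
    "uniform": uniform_entropy(sigma),
}
print(results)
```

Each entropy depends on $\sigma$ only through an additive $\log_2\sigma$ term, so the ranking is the same for every $\sigma > 0$, and doubling $\sigma$ adds exactly one bit.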
